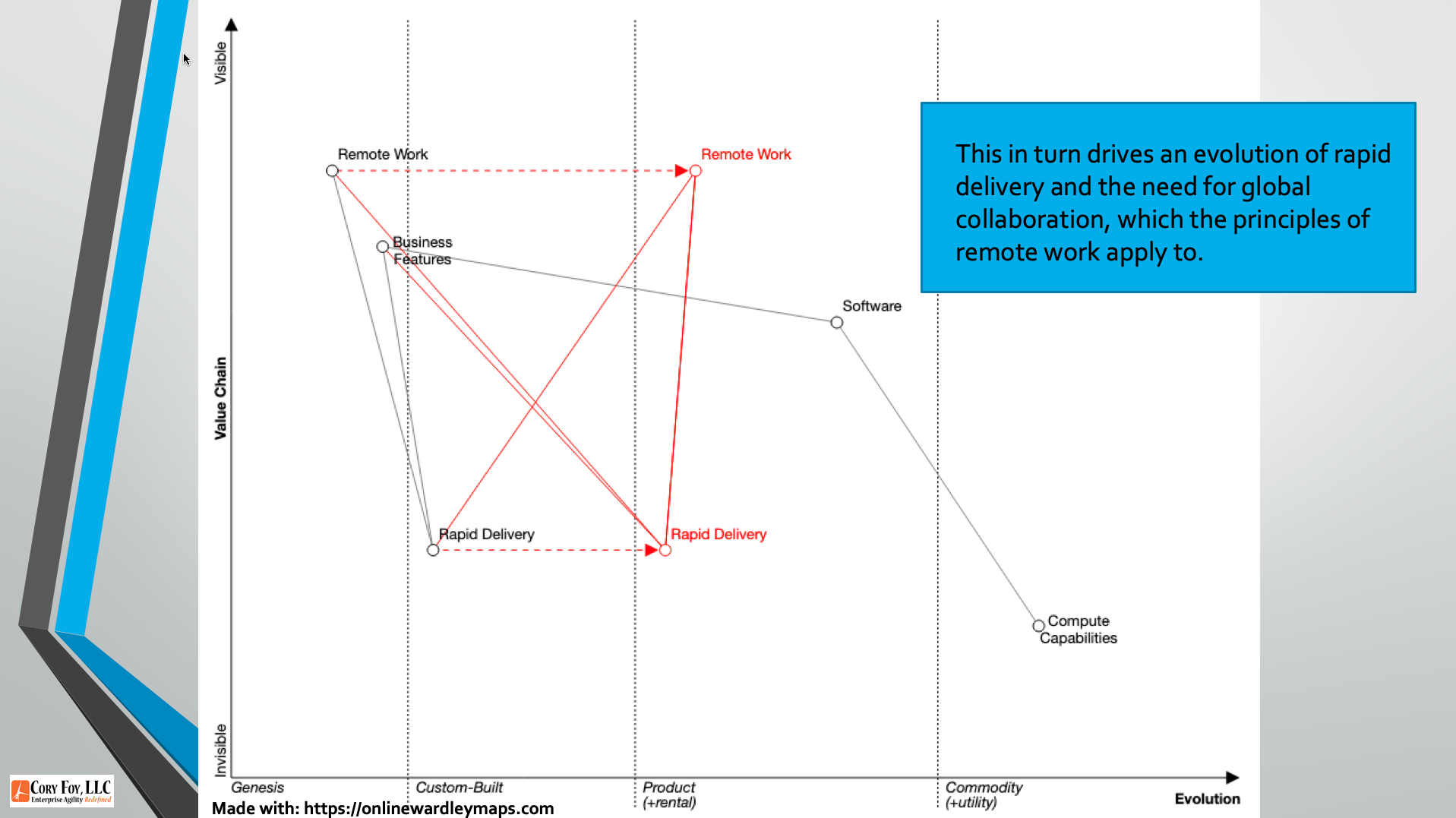

A talk from the November 2022 AgileRTP meetup covering product development with Wardley Mapping. If you’d prefer, you can download a PDF of the slides. Key links from the video: My free Wardley Map videos and resources, Simon’s Book, The Wardley Map wiki, The Wardley Map…

MapCamp 2020: Saving Your Business With Wardley Maps

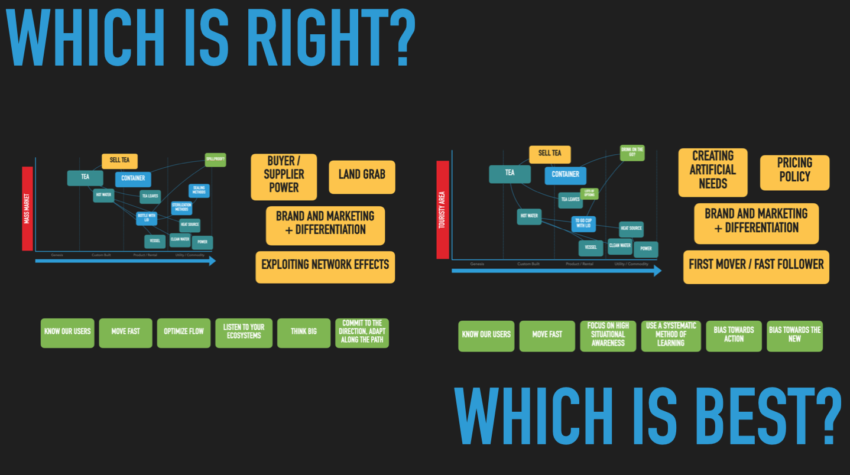

On July 29th, 2020, I had the privilege of presenting at Map Camp Germany 2020. My talk, entitled “Saving Your Business With Wardley Maps”, walks through a fictional case study, based on real-world events with my clients, of navigating strategic threats and using situational awareness to create winning gameplays. A PDF of my slides…

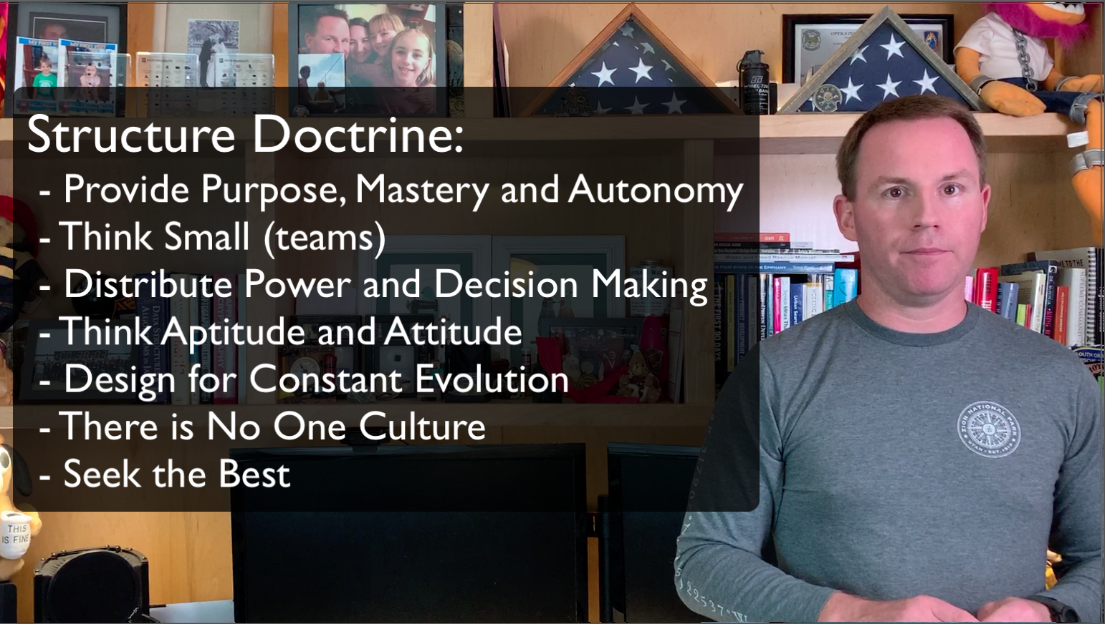

Wardley Mapping Mondays – Structure Doctrine

Find out the seven #wardleymapping doctrine principles of Org and Team Structure in this #mappingmondays video with Cory Foy.

It’s Time to Act – a Response to Marc Andreessen

As promised, I’m rereading the “Build” essay by Marc Andreessen with fresh eyes. Somewhat fresh eyes, since I woke up at 0430 thinking about it, but I have some tea and I’m ready to go. Let’s start from the beginning: “Every Western institution was unprepared for the coronavirus pandemic, despite many prior warnings. This monumental…”

Wardley Mapping Mondays – Operations Doctrine

Find out the seven #wardleymapping doctrine principles of Operations in this #mappingmondays video with Cory Foy.

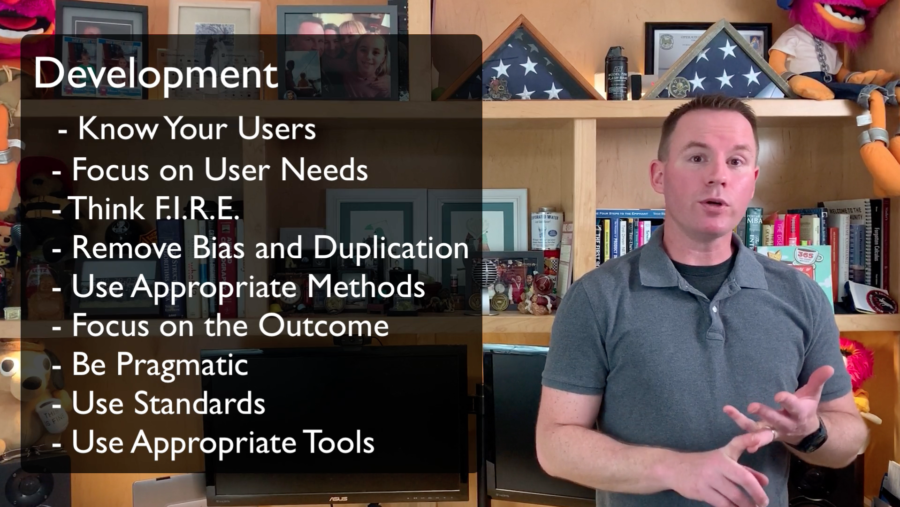

Wardley Mapping Mondays – Development Doctrine

Find out the nine #wardleymapping doctrine principles of Development in this #mappingmondays video with Cory Foy.

Wardley Mapping Mondays – Communication

Find out the four doctrine principles of Communication in this #mappingmondays video with Cory Foy.

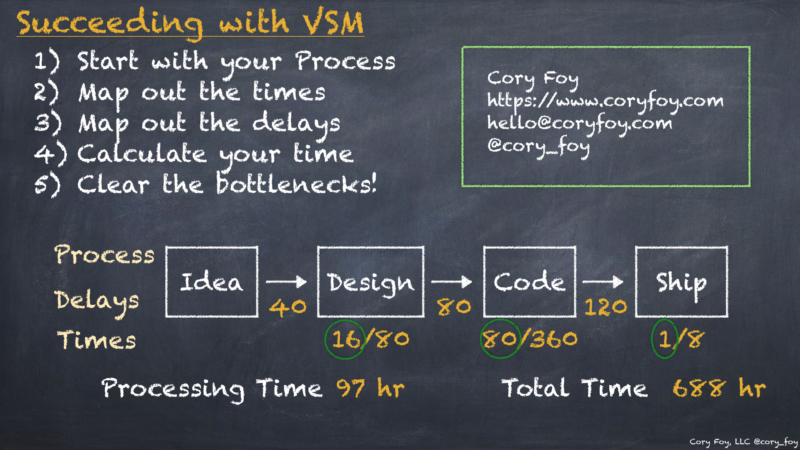

FASTER Fridays – Value Stream Mapping

Curious about Value Stream Mapping? Want to find where your real delays are? Watch this #fasterfridays video by Cory Foy to get mapping.

Wardley Mapping Mondays – Market Categories and Relevant Trends

Watch this #mappingmondays video with Cory Foy to learn how Wardley Mapping and the Product Positioning Framework can help your customers quickly understand your product and want to buy it.

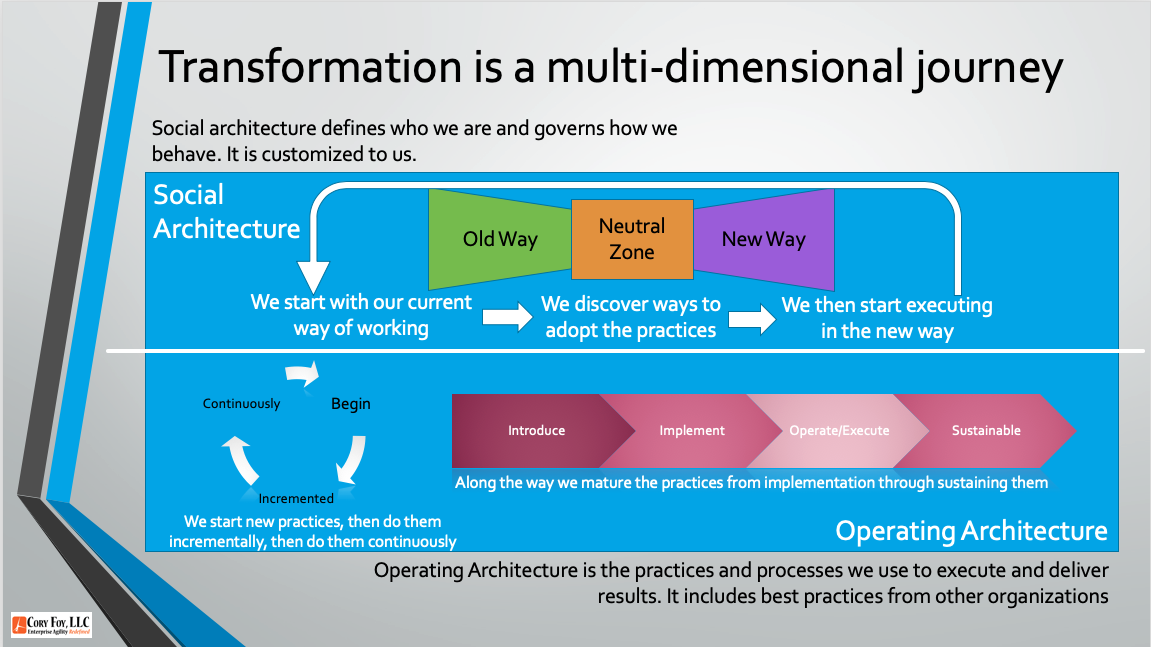

10 Questions Leaders Should Ask When Introducing Change

Find out the 10 questions you should ask as a leader before rolling out changes to your teams or organization.