A couple of weeks ago, Angela Harms posted the following Tweet:

Friend wants to help his CEO understand why XP will give better results. What links or articles would you suggest?

— Angela Harms (@angelaharms) January 16, 2014

For those of you not familiar with the concept, Extreme Programming (XP) is one of the few agile methodologies that focuses heavily on technical practices. So the question really becomes: how can we convince our management to let us do XP? The basic answer I’ve given for many years is along the lines of “You don’t, you just do it.” That still holds true at the team level in many cases. But what happens when you are working at a program level, or across an enterprise, and need to make the case for introducing technical practices?

In short, how can we sell XP?

While there are lots of ways to sell XP practices qualitatively (“Devs will work better, things will run smoother”), the business side is usually happier with quantitative data. So let’s take a hypothetical team and set up the case for XP.

The Team

Team Triscuit is a typical development team. They produce a Java-based product, and the team is made up of developers, analysts, testers, a Product Owner and a Scrum Master. Some test automation is in place, but a great deal of testing is still manual. Builds are created on a developer’s computer and handed to the testers. The team has delivered software successfully this way for 10 years.

The Challenge

The team has heard about the XP practices, and sees opportunities to improve how they work. However, they work in the micromanaged environment of a small company, where the pressure is to deliver constantly rather than to invest in their tools and platform.

The Key

What we want to do is provide data for Team Triscuit’s management that shows investing in XP practices will not only make the development team happier, but will save the company money and increase their business productivity.

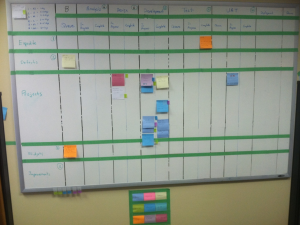

To do this, we’re going to need the team to capture some basic metrics. This is easy to do with an Information Radiator. What we want to capture is the flow of work: a story comes in, a dev works on it, it waits for a tester, the tester tests it, and then it is released to production.

These basics capture the “Value Stream” of the team. We can now watch as work flows through the board. We can also capture basic events about the work. Let’s see what happens.

A Normal Day

The team pulls a story and begins working on it. Because the board is in place, they move the story to the “Development” column. The developer works on the story, but runs into problems understanding how certain logic in the system works. She thinks she has it figured out, so she does some basic manual testing and creates a build. She then moves the story to the “Ready for Testing” column. The tester picks the build up, but immediately runs into a problem because a library the dev has on her machine wasn’t included in the build. The tester lets the dev know, but before he does, he marks the story with a little dot, indicating a delay.

The dev immediately realizes what happened, includes the correct library and regenerates the build. The tester gets the new build, and in his testing finds an edge case. He then “rejects” the story for failing a test case, marking the story on the board with a dot indicating it has to move backwards in the flow. The dev discusses the issue with a coworker, and the two of them figure out the problem and push a new build. This time the tester gives the all clear, and the story moves to complete.
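The day described above can be recorded as a small event log. The following is a minimal sketch, not a prescribed tool. The “Development” and “Ready for Testing” columns come from the story above; the other column names, the `Story` class, the `DELAY` and `REJECTED` labels and the story title are assumptions made for illustration.

```python
from collections import Counter
from dataclasses import dataclass, field

# Board columns in flow order. Only "Development" and "Ready for Testing"
# are named in the article; the other names are assumed.
COLUMNS = ["Backlog", "Development", "Ready for Testing", "Testing", "Done"]

# The two kinds of dots a story can collect (hypothetical labels).
DELAY = "delay"        # work was blocked, e.g. by a broken build
REJECTED = "rejected"  # work moved backwards after a failed test


@dataclass
class Story:
    title: str
    column: str = "Backlog"
    dots: list = field(default_factory=list)
    history: list = field(default_factory=list)

    def move_to(self, column: str) -> None:
        """Move the card and remember where it came from."""
        if column not in COLUMNS:
            raise ValueError(f"unknown column: {column}")
        self.history.append((self.column, column))
        self.column = column

    def mark(self, dot: str) -> None:
        """Stick a dot on the card."""
        self.dots.append(dot)


# Replay the day described above.
story = Story("Example story")
story.move_to("Development")
story.move_to("Ready for Testing")
story.move_to("Testing")
story.mark(DELAY)                  # library missing from the build
story.mark(REJECTED)               # edge case fails a test case
story.move_to("Development")       # back to the developer
story.move_to("Ready for Testing")
story.move_to("Testing")
story.move_to("Done")

dot_counts = Counter(story.dots)
backward_moves = sum(
    1 for src, dst in story.history
    if COLUMNS.index(dst) < COLUMNS.index(src)
)
print(dot_counts, backward_moves)
```

Counting dots and backward moves per story over a few weeks gives the raw frequencies that a cost estimate needs.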

Adding It Up

In our scenario here, the story was a “success” because it was finished during the sprint. However, there are clearly a lot of challenges in the flow of the work that wouldn’t otherwise be visible. This back and forth can be quite expensive. But now we can start measuring just how costly it is, and make a business case for fixing it.

Let’s start with automated builds. One of the first issues the team ran into was a missing library in the build because it was done on the developer’s computer. Let’s say we measure this flow over a month, and find that this happens 3 times per week. Each time:

1) The tester’s time was wasted finding the issue (30 minutes)

2) The developer has to context switch into fixing the issue and then back to whatever she was working on (30 minutes)

3) The developer has to actually fix and test the problem (15 minutes) and then do a new build (we’ll say 10 minutes, though I’ve seen this as high as 20 hours or more)

4) The tester can now start testing again

So, if the tester can stay busy while the dev is fixing it, then we’ve lost 85 minutes (30 + 30 + 15 + 10). If not, the tester also waits through the 25 minutes of fixing and rebuilding, and we’re at 110 minutes. That’s two hours or so, 3 times per week. Using the $37.50 hourly figure from my article on Shared Resources, that’s:

$37.50 * 2 hours * 3x Per Week * 50 weeks = $11,250 per year
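The arithmetic above can be checked with a few lines of Python. This is only a sketch: the constants are the figures quoted in the text, while the function names are made up for illustration, and the idle-tester case assumes the tester waits through the fix and the rebuild, which is the reading that reproduces the 110-minute figure.

```python
HOURLY_RATE = 37.50    # dollars per hour, as used in the text
WEEKS_PER_YEAR = 50    # working weeks per year, as used in the text

# Minutes lost per broken build, taken from the numbered list above.
TESTER_FINDS_ISSUE = 30
DEV_CONTEXT_SWITCH = 30
DEV_FIX_AND_TEST = 15
DEV_REBUILD = 10


def minutes_lost(tester_idle: bool) -> int:
    """Total minutes lost to one broken build."""
    base = (TESTER_FINDS_ISSUE + DEV_CONTEXT_SWITCH
            + DEV_FIX_AND_TEST + DEV_REBUILD)
    # Assumption: an idle tester also loses the fix and rebuild time.
    waiting = DEV_FIX_AND_TEST + DEV_REBUILD if tester_idle else 0
    return base + waiting


def annual_cost(hours_per_incident: float, incidents_per_week: float) -> float:
    """Yearly cost in dollars of a recurring incident."""
    return HOURLY_RATE * hours_per_incident * incidents_per_week * WEEKS_PER_YEAR


print(minutes_lost(tester_idle=False))  # 85
print(minutes_lost(tester_idle=True))   # 110
print(annual_cost(2, 3))                # 11250.0
```

Passing the unrounded 110 minutes (`110 / 60` hours) instead of 2 hours gives roughly $10,300 per year, so the rounded figure is slightly generous but in the right range.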

OK, so now let’s look at testing. Clearly there was an issue with complexity in the code base. If the tests had been automated (and fast!), then the developer could have run them herself before handing the story to a tester. If we imagine that every third story has bugs that send it back to a developer, and that each round trip again costs us about 2 hours, then treating each of the 50 working weeks as one sprint we could see something like:

$37.50 * 2 hours * 15 times per sprint * 50 weeks = $56,250 per year
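The formula multiplies a per-sprint count by 50 weeks, which only lines up if a sprint lasts one week. The sprint length is not stated in the text, so the sketch below treats it as an explicit assumption and shows how sensitive the estimate is to it. The function and parameter names are hypothetical.

```python
HOURLY_RATE = 37.50    # dollars per hour, as used in the text
WEEKS_PER_YEAR = 50    # working weeks per year, as used in the text


def annual_rework_cost(hours_per_rejection: float,
                       rejections_per_sprint: float,
                       sprint_weeks: float = 1) -> float:
    """Yearly cost in dollars of stories bouncing back from test.

    sprint_weeks is an assumption; one week reproduces the figure above.
    """
    sprints_per_year = WEEKS_PER_YEAR / sprint_weeks
    return (HOURLY_RATE * hours_per_rejection
            * rejections_per_sprint * sprints_per_year)


one_week = annual_rework_cost(2, 15)                  # 56250.0
two_week = annual_rework_cost(2, 15, sprint_weeks=2)  # 28125.0

# Combined with the $11,250 build estimate from earlier in the text.
total = 11_250 + one_week                             # 67500.0
print(one_week, two_week, total)
```

Even with two-week sprints, the rework estimate stays well above the cost of the broken builds, so the argument does not hinge on the exact sprint length.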

So we’re talking about some real money here. We could extend the value stream further to see how this causes issues downstream, but the above gives us the gist of the situation.

So Now What?

With the data above, even if we don’t use the financial numbers, we have a sense of the very real impact of the practices we’re using. More importantly, we have a baseline against which to measure improvement. After all, if we’re having issues because of manual builds and we implement automated builds, we should see those issues go down. Likewise, if communication turns out to be an issue, then Pair Programming should cause the cycle time (the amount of time from when work starts until it is delivered) to go down. We don’t just feel better; we’re getting better, and can prove it.
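Comparing cycle time against a baseline takes very little code. The sketch below is one possible way to do it, with every date invented purely for illustration. It is not data from a real team, and the function names are hypothetical.

```python
from datetime import date
from statistics import mean


def cycle_time_days(started: date, delivered: date) -> int:
    """Days from when work starts until it is delivered."""
    return (delivered - started).days


def average_cycle_time(stories) -> float:
    """Mean cycle time over (started, delivered) pairs."""
    return mean(cycle_time_days(s, d) for s, d in stories)


# Hypothetical sample: stories finished before a practice change...
baseline = [
    (date(2014, 1, 6), date(2014, 1, 15)),   # 9 days
    (date(2014, 1, 8), date(2014, 1, 15)),   # 7 days
    (date(2014, 1, 13), date(2014, 1, 21)),  # 8 days
]
# ...and stories finished after it (also invented).
after_change = [
    (date(2014, 2, 3), date(2014, 2, 8)),    # 5 days
    (date(2014, 2, 4), date(2014, 2, 10)),   # 6 days
    (date(2014, 2, 10), date(2014, 2, 14)),  # 4 days
]

before_avg = average_cycle_time(baseline)
after_avg = average_cycle_time(after_change)
improvement = before_avg - after_avg
print(before_avg, after_avg, improvement)
```

With real start and delivery dates read off the board, the same comparison shows whether a new practice actually moved the number.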

So, if you need to make the case for adopting technical practices, think about how you can measure both the qualitative and quantitative impacts and improvements. We want the team to be more effective, of course. But if you can show that improvement to the business, you might win yourself an ally as well.
